There’s a lot of talk about 'AI governance' these days, and for good reason. With executives focused on AI and generative AI adoption, and with AI-specific regulations like the EU AI Act and standards like ISO/IEC 42001 emerging rapidly, enterprises have no time to waste. Any organization building, buying, or using AI needs to start putting processes, systems, and tools in place to manage the risk and compliance of its AI systems, or risk falling behind.

But starting can be daunting.

New concepts pop up every day, and it's easy to be overwhelmed by the sheer number of tools on the market that seem to offer similar functions but actually have different value propositions.

Where to start? Fret not! We are here to help.

Drawing on our experience helping organizations implement effective AI governance strategies, and on our active role in shaping the AI governance ecosystem, we at Credo AI want to cut through the noise and give you the clarity you need to understand, once and for all, what 'AI governance' is and how it differs from concepts and tools that sound similar but are not the same.

Clarity here is critical: it ensures your organization is set up for success and takes everything into account, so that your AI is fully governed from A to Z.

MLOps, model risk management, and privacy workflows are all essential components, but none of them is a substitute for AI governance. Let’s look at why!

Breaking the Mold: Why Existing Processes Won't Cut It for AI Governance

Today, many large enterprises already have various technology-related governance processes in place, such as cybersecurity and privacy workflows, data governance processes, or model risk management functions. And many organizations that are just getting started with AI governance find themselves wondering, “Can I retrofit something that my organization is already doing to address my AI governance needs?”

The answer is simple: no.

The reality is that if your organization wants to manage AI risk and comply with AI-specific laws and regulations, you are going to need a new process that deals with the specific challenges and requirements of AI governance.

What makes AI governance different from data privacy, cybersecurity, and other existing governance processes?

1. The bigger picture: AI governance includes governance of datasets, models, and AI use cases.

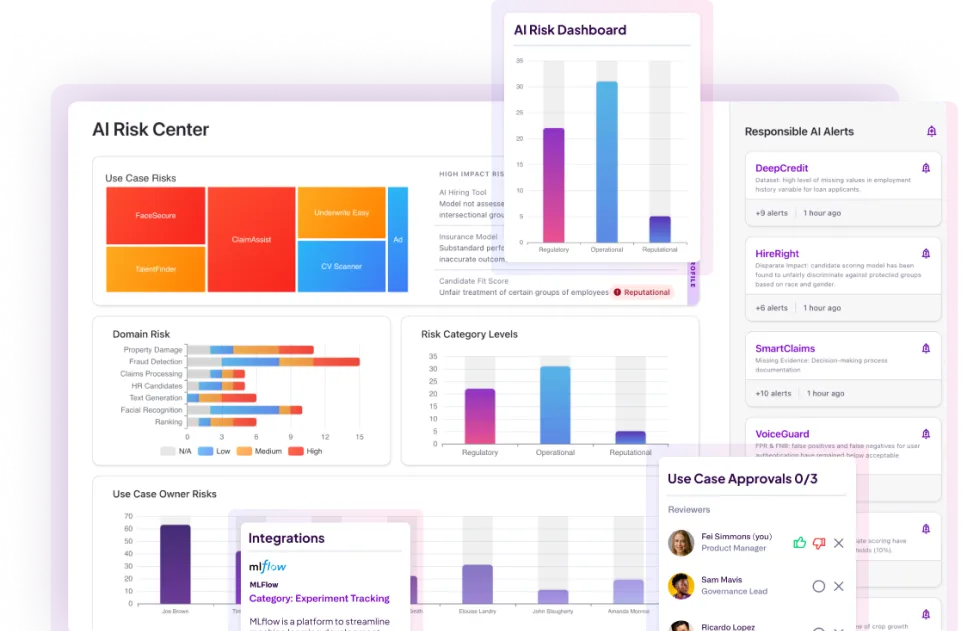

AI governance is about establishing oversight and guardrails over all of the components of an AI system: not just models, but also the datasets used to train and test those models. Perhaps the most critical component for governance purposes, however, is the use case, meaning the context of where, how, and why the model(s) will be deployed. AI governance is the “control center” that oversees everything!

Existing governance processes such as data privacy and model risk management do not effectively account for all of the entities involved in AI governance: use cases, models, and datasets. Data governance and privacy workflows focus on data, helping organizations manage data access and usage, consent, and sharing, not AI.

Model risk management and MLOps workflows manage model-related risk, focusing on technical issues such as robustness and performance. But neither the data-focused nor the model-focused practices address use cases, and neither is set up to bring these different entities together into a “big picture” view of an AI system's risk and compliance.

For effective AI governance, you need a system of record that tracks the use cases, models, and datasets for every AI system or product in use across your organization, and the use case should define the governance requirements for its models and datasets based on applicable laws, regulations, and standards. This can only be done with a dedicated AI governance process.
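
To make the idea of a system of record more concrete, here is a minimal Python sketch, assuming a hypothetical data model in which each use case lists the models and datasets it relies on and carries its own requirement IDs. The class names, the `requirements_by_entity` helper, and the requirement IDs are illustrative only; they are not taken from any product or regulation. What the sketch shows is the direction of flow: requirements are attached to the use case and then pushed down to every model and dataset it uses.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Dataset:
    name: str


@dataclass(frozen=True)
class Model:
    name: str
    third_party: bool = False


@dataclass
class UseCase:
    name: str
    models: list[Model] = field(default_factory=list)
    datasets: list[Dataset] = field(default_factory=list)
    # Hypothetical requirement IDs derived from the laws, regulations,
    # and standards that apply to this use case's context.
    requirements: set[str] = field(default_factory=set)


def requirements_by_entity(use_cases: list[UseCase]) -> dict[Model | Dataset, set[str]]:
    """Push each use case's requirements down to its models and datasets.

    An entity shared by several use cases accumulates the union of their
    requirements, so one record shows everything it must satisfy.
    """
    result: dict[Model | Dataset, set[str]] = {}
    for use_case in use_cases:
        for entity in (*use_case.models, *use_case.datasets):
            result.setdefault(entity, set()).update(use_case.requirements)
    return result


# Example: one third-party model shared by two use cases (hypothetical names).
assistant = Model("vendor-llm", third_party=True)
tickets = Dataset("support-tickets")
faq_bot = UseCase("customer FAQ bot", [assistant], [tickets], {"REQ-ai-disclosure"})
claims = UseCase("claims triage", [assistant], [], {"REQ-human-review"})

reqs = requirements_by_entity([faq_bot, claims])
print(sorted(reqs[assistant]))  # ['REQ-ai-disclosure', 'REQ-human-review']
print(sorted(reqs[tickets]))    # ['REQ-ai-disclosure']
```

Keeping requirements on the use case rather than on the model is the design choice that matters here: the shared model inherits obligations from every context it is deployed in.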

2. Adaptability: Enterprise AI governance programs must support governance of highly flexible third-party AI models and applications.

Before the advent of large language models, many organizations focused on building most or all of their models in-house. These classical machine learning models were trained on proprietary data and purpose-built for the specific application they would be deployed in, which gave organizations a high level of control over them from a governance perspective.

Today, however, most organizations plan to use third-party models for generative AI use cases, and these models are not purpose-built; they are designed to be highly flexible and deployed in many different contexts. For example, GPT-4 could power an internal document search tool for employees, and it could also sit inside an application that helps doctors evaluate patient data and make diagnostic decisions. Even though GPT-4 is the underlying model in both use cases, the governance requirements and appropriate guardrails for these two systems are very different.

Governing highly flexible third-party models, that is, ensuring that their risks are effectively managed and that they are deployed in a compliant and safe manner, presents a new set of challenges that traditional model risk management and technical governance workflows are not equipped to address.

Model risk management and MLOps-driven governance processes are not designed to define different governance requirements for the same model depending on where and how it’s being used. Nor are they designed to govern externally developed models: these technical governance approaches often require full control over model design, development, and training, which you don't have when you’re working with a third-party LLM.

Existing governance processes that focus only on managing the risks of internally developed AI are not going to adapt well to the new needs of AI governance in a generative AI world.

Instead, you need a flexible governance process that lets you assess and mitigate risks even when you are using a model developed by somebody else, and that lets you define governance requirements based not just on which model is being used, but also on its context: where, how, and why that model will be deployed.
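
A minimal Python sketch of context-driven requirements follows, assuming a hypothetical rule table in which each rule pairs a predicate on the deployment context with the requirement IDs it triggers. The `Deployment` fields, the rules, and the requirement IDs are invented for illustration; real rules would be derived from the laws, regulations, and standards that apply to each context. It reuses the GPT-4 example from this section: the model is identical in both deployments, and only the context changes the outcome.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Deployment:
    """One model deployed in one context: where, how, and why it is used."""
    model: str
    third_party: bool
    purpose: str
    domain: str                           # e.g. "internal-knowledge" or "healthcare"
    informs_decisions_about_people: bool


# Hypothetical rules: (predicate on the deployment, requirement IDs it triggers).
Rule = tuple[Callable[[Deployment], bool], frozenset[str]]
RULES: list[Rule] = [
    (lambda d: True, frozenset({"usage-logging"})),
    (lambda d: d.third_party, frozenset({"vendor-due-diligence"})),
    (lambda d: d.domain == "healthcare", frozenset({"clinical-validation", "human-oversight"})),
    (lambda d: d.informs_decisions_about_people, frozenset({"bias-assessment"})),
]


def requirements_for(deployment: Deployment) -> set[str]:
    """Collect every requirement whose rule matches this deployment."""
    required: set[str] = set()
    for applies, requirement_ids in RULES:
        if applies(deployment):
            required |= requirement_ids
    return required


# The same third-party model in two contexts.
search = Deployment("gpt-4", True, "internal document search", "internal-knowledge", False)
diagnosis = Deployment("gpt-4", True, "diagnostic support", "healthcare", True)

print(sorted(requirements_for(search)))
# ['usage-logging', 'vendor-due-diligence']
print(sorted(requirements_for(diagnosis) - requirements_for(search)))
# ['bias-assessment', 'clinical-validation', 'human-oversight']
```

Because the rules key on the deployment rather than on the model, a third-party model you cannot inspect or retrain can still be governed through the context it is placed in.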

3. Tech Meets Business: AI governance must be integrated with technical and business infrastructure.

An effective AI governance process defines governance requirements for your AI use cases, models, and datasets. To understand whether an AI system meets those requirements, you need to evaluate both technical evidence and documentation.

A mature AI governance process is tightly integrated with the tools and workflows in which your AI teams already generate evidence. This reduces the manual effort of providing evidence for governance, which in turn supports adoption of the AI governance process across the enterprise. For example, suppose your AI governance tooling is tightly integrated with Confluence and Jira, where teams document the AI systems they’re building, and with your AI deployment infrastructure, where model monitoring metrics are calculated, so that it can automatically extract relevant evidence from these systems. Technical teams then don’t have to manually upload documentation or metrics for governance teams to review; the information simply flows, resulting in a seamless oversight process.
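
The integration pattern described above can be sketched in Python under loudly stated assumptions: each "collector" is a hypothetical stand-in for a connector to a system your teams already use (a documentation wiki such as Confluence, or a monitoring service), and the stubs below return fixed sample records instead of calling any real API. The `Evidence` type, the collector functions, and the requirement IDs are all invented for this example.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Evidence:
    requirement_id: str
    source: str      # e.g. "docs" or "monitoring"
    summary: str


# A collector pulls evidence from one system teams already use. Real connectors
# would call that system's API; these hypothetical stubs yield fixed records.
Collector = Callable[[], Iterable[Evidence]]


def docs_collector() -> Iterable[Evidence]:
    yield Evidence("model-card", "docs", "Model card page for the search assistant")


def monitoring_collector() -> Iterable[Evidence]:
    yield Evidence("drift-monitoring", "monitoring", "Weekly drift metric below threshold")


def evidence_status(required: set[str], collectors: list[Collector]) -> dict[str, list[Evidence]]:
    """Group collected evidence by requirement; requirements with no evidence map to []."""
    status: dict[str, list[Evidence]] = {req: [] for req in sorted(required)}
    for collect in collectors:
        for item in collect():
            if item.requirement_id in status:   # ignore evidence nobody asked for
                status[item.requirement_id].append(item)
    return status


status = evidence_status(
    {"model-card", "drift-monitoring", "bias-assessment"},
    [docs_collector, monitoring_collector],
)
missing = [req for req, items in status.items() if not items]
print(missing)  # ['bias-assessment']
```

In this sketch, a requirement with no collected evidence surfaces as a gap for governance reviewers, without anyone uploading files by hand; adding a new integration means adding one more collector to the list.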

Because AI governance touches such a wide set of entities, your AI governance program will need to be tightly integrated with a wide set of tools. This includes more than your technical infrastructure, where model assessments and monitoring take place. It also encompasses existing governance tools like your data privacy management system, data governance tooling, and cybersecurity systems, as well as the project management tools where AI use cases are defined and put on the roadmap.

Again, existing governance processes have their strengths and weaknesses when it comes to integrations: model risk management is often tightly integrated with technical model assessment tooling, while data governance is often integrated with your data infrastructure and systems of record. But none of your existing governance processes will have the breadth of integrations across all of the tools and evidence needed to bring insight into AI risk and compliance.

4. It is all about AI: AI governance requires AI-specific risk and compliance content—risk scenarios and controls.

Another thing that sets AI governance apart from your existing governance processes is the kind of risks and compliance requirements your AI governance program needs to address, which are (this may seem obvious) AI-specific.

Your existing security, data privacy, and enterprise risk management workflows are not going to effectively account for AI-specific risks like the risk of adversarial attacks on an AI system, or the risk of generating copyright-infringing content through use of an LLM. And your existing compliance processes are not going to enable you to evaluate compliance with emerging AI-specific laws like the EU AI Act, New York City Local Law 144, or Colorado SB 21-169.

Supporting your AI governance efforts requires a new library of risk scenarios and controls, one that is tailored to the specific risks of AI systems. This library must cover a comprehensive set of possible risks, including fairness, robustness, security, privacy, and transparency. Narrowly focusing on one risk area, such as privacy or security, will address only one of the many kinds of risk your AI governance program must tackle.
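
As a rough illustration of why breadth matters, here is a minimal Python sketch of a risk library with a coverage check. It assumes a hypothetical structure in which each risk scenario belongs to one of the five risk areas named above and lists the control IDs that mitigate it. The scenario texts echo risks mentioned in this post, but the area each one is filed under, the IDs, and the `uncovered_areas` helper are illustrative assumptions, not an established taxonomy.

```python
from dataclasses import dataclass

# The risk areas named in the text; a real taxonomy may be broader.
RISK_AREAS = frozenset({"fairness", "robustness", "security", "privacy", "transparency"})


@dataclass(frozen=True)
class RiskScenario:
    scenario_id: str
    area: str
    description: str
    controls: tuple[str, ...]   # hypothetical control IDs that mitigate the scenario

    def __post_init__(self) -> None:
        # Reject scenarios filed under an area outside the agreed taxonomy.
        if self.area not in RISK_AREAS:
            raise ValueError(f"unknown risk area: {self.area!r}")


def uncovered_areas(library: list[RiskScenario]) -> set[str]:
    """Risk areas with no scenario that has at least one control mapped to it."""
    covered = {s.area for s in library if s.controls}
    return set(RISK_AREAS - covered)


privacy_only = [
    RiskScenario("R1", "privacy", "Personal data exposed in model outputs", ("C-redact-pii",)),
]
broader = privacy_only + [
    RiskScenario("R2", "security", "Adversarial attack on the AI system", ("C-red-team",)),
    RiskScenario("R3", "robustness", "Hallucinated content in LLM answers", ("C-grounding-check",)),
    RiskScenario("R4", "fairness", "Harmful bias in model decisions", ("C-bias-test",)),
    RiskScenario("R5", "transparency", "Users unaware they interact with AI", ()),
]

print(sorted(uncovered_areas(privacy_only)))
# ['fairness', 'robustness', 'security', 'transparency']
print(sorted(uncovered_areas(broader)))
# ['transparency']  (scenario R5 has no control yet)
```

Note that the check counts an area as covered only when a scenario in it has at least one control, so merely naming a risk without mitigating it still shows up as a gap.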

This is another place where your existing governance processes fall short: your data privacy program cannot effectively manage the risk of hallucinations in your LLM-based applications, and your cybersecurity program cannot help you evaluate the risk of harmful bias in your AI-powered applications. Bringing these different risks together into a single pane of glass is critical for comprehensive risk management throughout the AI lifecycle.

AI governance is quite different from the existing workflows your organization already has in place for data governance, privacy, and cybersecurity. The tools you use for these processes each have their own strengths, and each intersects with AI governance in relevant ways, but none of them can fully support you in your AI governance journey.

Organizations need governance workflows specific to AI, and any company buying, deploying, or using AI should consider investing in AI governance tools.

If you’re looking for a solution that can bring together all of the work that your organization is already doing across these existing domains into a single pane of glass, purpose-built for AI governance, the Credo AI Platform is the solution for you.

Reach out to our team and learn how we can support you with all the steps of AI governance!

DISCLAIMER. The information we provide here is for informational purposes only and is not intended in any way to represent legal advice or a legal opinion that you can rely on. It is your sole responsibility to consult an attorney to resolve any legal issues related to this information.
