Papers in this issue:

- Evaluating the Social Impact of Generative AI Systems in Systems and Society

- Model evaluation for extreme risks

Welcome to Paper Trail, Credo AI’s series where we break down complex papers impacting AI governance into understandable bits. In this first post, we're diving into two papers that talk about AI risks and how we can start to handle them.

Summary

The key idea here is, “You can’t manage what you don’t measure”. As the capabilities of AI systems improve, the challenge of evaluating their growing risks and impacts becomes more pressing. The first step is knowing what to evaluate. These two papers offer two different and complementary perspectives on this question.

Key Takeaways

- As AI systems grow in complexity and impact, new evaluations are needed to deal with the evolving risk landscape.

- These two papers present taxonomies of risks focused on two critical areas: (1) impacts to people & society and (2) extreme risks.

- While each risk requires its own evaluations and mitigations, building in a robust, repeatable process of evaluation discovery, implementation and application will be critical for developing the next generation of AI systems safely.

Recommendations to Policy Makers

- Invest in the ecosystem for external safety and societal evaluation and create venues for stakeholders to discuss evaluations.

- Track the development of dangerous capabilities by establishing a formal reporting process and initial operationalizations of emerging risks.

- Standardize operationalization of social impacts using qualitative and quantitative evaluations to track the consequences of AI to people and society.

- Mandate external audits, particularly for highly capable or impactful AI systems.

- Embed risk evaluations into the regulation of AI deployment, clarifying the requirements that must be met before broad deployment.

Evaluating impacts in Systems and Society

Evaluating the Social Impact of Generative AI Systems in Systems and Society correctly views AI systems as socio-technical. It divides the evaluations into two broad categories: measurements of the base AI system separate from society, and measurements of society. Evaluations of the base AI system consist of seven categories (e.g., “bias”, “privacy and data protection”, “environmental costs”), each of which is associated with a high-level description of the risk and evaluations. This taxonomy summarizes and clarifies a host of research, serving as an excellent reference for any AI developer.

While the base system evaluations are helpful, the more novel contribution of the paper is defining different impacts on People and Society and proposing evaluations for them. The authors provide a cogent summary of the main societal impacts currently under discussion, including impacts on labor and the economy, widening inequality, and the concentration of power. Overall, the paper provides a helpful taxonomy and reference for a range of risks and mitigation approaches. Of course, a single paper cannot do complete justice to the complexity of each area, and many of the proposed evaluations are difficult to operationalize in practice. These complexities are real, however, and many evaluations need further research before they can be well defined at both a technical and a societal level.

Evaluating Extreme Risks

Model evaluation for extreme risks has a different focus. This paper recognizes that as AI system capabilities improve, it is plausible that these systems will pose “extreme risks”, by which the authors mean risks that have a huge scale of impact (e.g., thousands of lives lost or billions of dollars of damage), or significant disruption to the social order (e.g., disempowerment of governments or creation of inter-state war). To evaluate whether models pose such extreme risks, they define capabilities that are likely precursors of those significant impacts, which include behaviors like “deception” and “self-proliferation”. Evaluations for these capabilities are themselves divided into two categories: “(a) whether a model has certain dangerous capabilities, and (b) whether it has the propensity to harmfully apply its capabilities (alignment).” Each of these requires novel approaches for evaluations, which are nicely represented by

The authors then describe where evaluations show up in an AI system’s lifecycle, from responsible training and development to transparency and reporting. Similar to the previous paper, they do not define any particular evaluation in detail. Instead, they describe the desirable qualities of any evaluation: it must be comprehensive, interpretable, and safe.

Finally, they describe some hazards related to conducting and reporting evaluations. These include advertising dangerous capabilities, accelerating race dynamics, and creating a false sense of safety if evaluations are not robust enough.

Why does this matter?

Model evaluation has been a cornerstone of machine learning (ML) since the start. But with AI becoming more general, we need to quickly adapt our understanding of the risk landscape. Building new evaluations is crucial for developers to apply appropriate safeguards, for organizations to make informed decisions, and for governments to set enforceable regulations.

Making these evaluations commonplace requires research advancement, agreement from diverse stakeholders, and societal-scale change management. These are big tasks! But having a clear classification of the risk landscape helps to coordinate efforts across organizations by creating a shared understanding of what’s important.

How is it relevant to AI Governance?

AI Governance is about setting the rules for how AI is developed and used. While both papers focus on evaluations, they highlight very different risks. So, which risks should organizations prioritize?

Well, it depends. Organizations working on the cutting edge of AI have a greater responsibility to evaluate extreme risks. But organizations deploying powerful new AI systems in society should focus more on impacts to people and society.

However, we challenge the notion that we should only focus on a subset of risks. Huge amounts of money are flowing into AI, and it's essential to ask how much should be dedicated to building strong evaluation systems. The risks described in these papers could inspire governments to require a wider range of evaluations, changing the cost/benefit analysis for the private sector. This, combined with daily advancements in evaluation technologies that lower their costs, justifies more focus on rigorous evaluation requirements in AI systems' development and deployment.

As we continue to unlock AI's vast potential, having robust, thoughtful, and comprehensive evaluation systems is not a luxury, but a necessity. At Credo AI, we're committed to helping on this critical journey. Join us as we navigate this evolving landscape, transforming how we approach AI governance for a safer and more equitable future.

About Credo AI

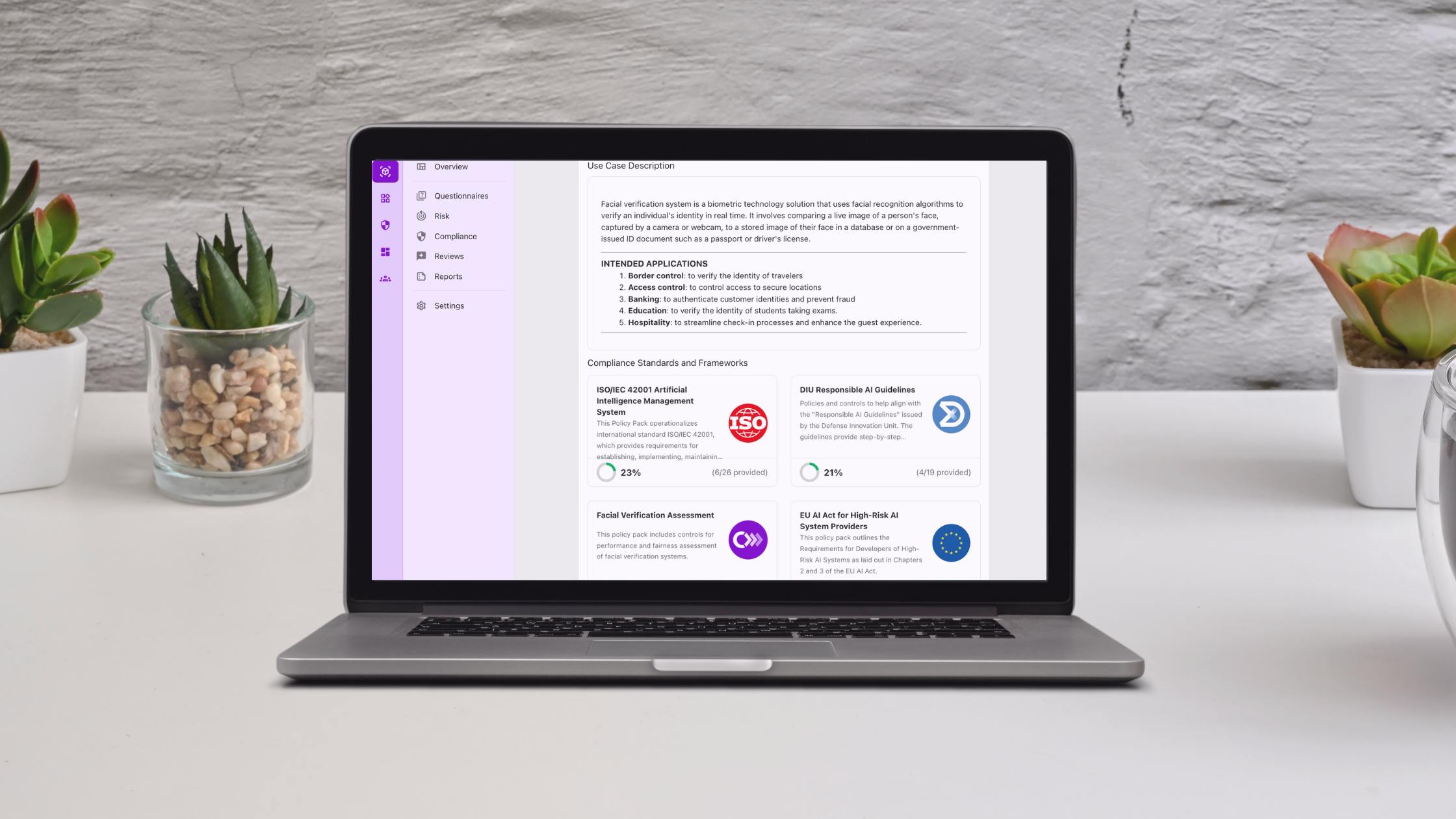

Credo AI is on a mission to empower enterprises to responsibly build, adopt, procure and use AI at scale. Credo AI’s cutting-edge AI governance platform automates AI oversight and risk management, while enabling regulatory compliance to emerging global standards like the EU AI Act, NIST, and ISO. Credo AI keeps humans in control of AI, for better business and society. Founded in 2020, Credo AI has been recognized as a CBInsights AI 100, Technology Pioneer by the World Economic Forum, Fast Company’s Next Big Thing in Tech, and a top Intelligent App 40 by Madrona, Goldman Sachs, Microsoft and Pitchbook.

Ready to unleash the power of AI through governance? Request a demo today!

DISCLAIMER. The information we provide here is for informational purposes only and is not intended in any way to represent legal advice or a legal opinion that you can rely on. It is your sole responsibility to consult an attorney to resolve any legal issues related to this information.
