What are Standards?

In their simplest form, standards provide a common language for the minimum expectations of what a product or service should and should not do. Standards help ensure consistency and can facilitate global alignment and interoperability. They can be voluntary or mandatory, and they play an important role in the regulatory landscape.

Standards are not new; the International Organization for Standardization (ISO) was created in 1947, and has published over 25,000 standards covering new technologies and products. International, globally interoperable standards are common in industries such as manufacturing or healthcare, which have established minimum requirements for the health, safety, and security of products and services.

Why are standards for AI systems important?

Standards for AI systems create alignment on what is being measured and provide a comparable baseline for AI model evaluation. Standards help establish common definitions for AI governance practices such as risk management, acceptable accuracy or robustness metrics, and the definition of “human oversight” for AI systems.

The global interoperability of standards is what gives them the greatest value to businesses: consumers can trust that products and services created anywhere in the world have been designed and developed in adherence to the same set of basic principles and requirements. Standards provide businesses with a degree of certainty that their design process is adequate in comparison with their industry peers and that they are considered “safe” by regulatory bodies. This, in turn, inspires trust amongst consumers in different geographic markets.

Various standards can serve as the basis for “trustworthy design.” Two critical standards for the enterprise are ISO/IEC 42001 and the NIST AI RMF.

ISO/IEC 42001

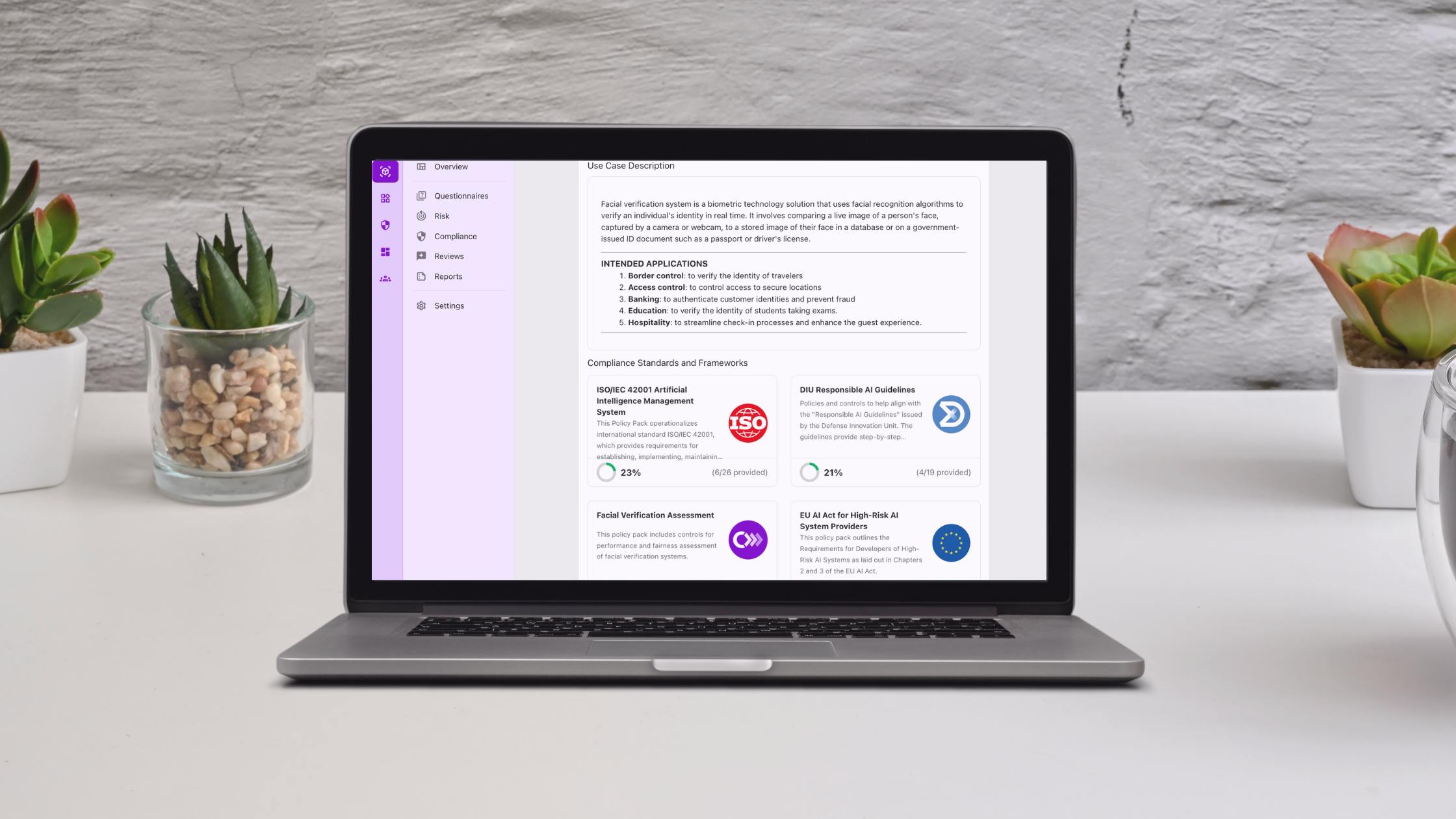

ISO/IEC 42001 is a globally recognized management system standard that specifies requirements and provides guidance for establishing, implementing, and continually improving an AI management system. At its core, ISO/IEC 42001 establishes international foundational practices for organizations to develop AI responsibly and effectively while promoting public trust in AI systems with a standard that can eventually be certified through a third-party audit.

Learn more about ISO 42001 in our blog post “Credo AI Launches the Most Comprehensive Governance Solution to Support ISO 42001 Adoption”.

NIST AI Risk Management Framework

In the U.S., the National Institute of Standards and Technology (NIST) published an AI Risk Management Framework that guides organizations in managing and mitigating AI-related risks in a structured, measurable, and flexible manner. This framework is agnostic to sector, use case, and law or regulation, providing organizations with a horizontal foundation for understanding, mapping, measuring, managing, and governing AI risks.

Learn everything you need to know about the NIST AI RMF in our blog post “Mastering AI Risks: Building Trustworthy Systems with the NIST AI Risk Management Framework (RMF) 1.0”.

For enterprises developing and deploying AI systems, it is important to recognize that emerging standards and regulations, including the EU AI Act, share common elements such as implementing an AI risk management system, keeping technical documentation, and incorporating human oversight.

What is the role of standards in the context of the EU AI Act?

The EU AI Act depends on the development of “harmonized standards” to operationalize its requirements and determine “what good looks like.” The requirements within the EU AI Act were designed to be high-level, utilizing the standardization process to detail the operational requirements. In this context, standards offer greater flexibility: updating a standard often proves less cumbersome than amending legislation, thereby allowing for a more agile response to evolving AI technologies and capabilities.

The European Committee for Standardization (CEN), the European Committee for Electrotechnical Standardization (CENELEC), and the European Telecommunications Standards Institute (ETSI) are working, in partnership with international standards organizations ISO, IEC, and ITU, to develop the following standards for EU AI Act compliance:

- risk management for AI systems;

- governance and quality of datasets used to build AI systems;

- record keeping through logging capabilities by AI systems;

- transparency and information provisions for users of AI systems;

- human oversight of AI systems;

- accuracy specifications for AI systems;

- robustness specifications for AI systems;

- cybersecurity specifications for AI systems;

- quality management systems for providers of AI systems, including post-market monitoring processes; and,

- conformity assessment for AI systems.

While these standards are not expected to be finalized until the end of 2025, enterprises that intend to make their systems available in the EU don’t have to wait. Credo AI can help enterprises map, measure, and mitigate risk by adopting best practices for AI governance today.

Conclusion

Choosing the right standard will depend on your enterprise needs and where you plan to make your AI system available. Adopting an internationally recognized standard such as ISO/IEC 42001 can help you set the foundations for risk management and provide a building block towards adopting sector-specific standards and forthcoming mandated EU AI Act standards.

Enterprises should focus on what they can do today to ensure they are designing and deploying AI systems that are transparent and auditable and that ultimately continue to enable enterprise success. Adopting quality record-keeping is the first step to help ensure the quality and longevity of enterprise-wide AI adoption for years to come.

- Reach out to us to adopt the NIST AI RMF or ISO/IEC 42001.

- Talk to our experts to learn how we can support your organization in adopting AI governance!

DISCLAIMER. The information we provide here is for informational purposes only and is not intended in any way to represent legal advice or a legal opinion that you can rely on. It is your sole responsibility to consult an attorney to resolve any legal issues related to this information.
